Why Hasn't AI Revolutionized Our Workplaces Yet? 📉🤖

Explore why AI hasn't revolutionized workplaces yet. Uncover challenges of AI agents, chatbots, and LLMs in transforming the modern workplace.

If you’ve been paying attention to the hype around AI, you may have noticed that the last year has been a slow one. In early 2023, right after ChatGPT was released, there was a wave of optimism. We heard that the AI revolution was coming, that the economy would change overnight, and, according to some, that humanity would be out of a job. This hype wasn’t just lip service; investors put their money where their mouths were. Total investment in AI over the last 3 years has been a whopping $750 billion, and the number of new AI startups in 2023 was more than double that of 2022.

In February 2023, Sam Altman posted on X:

"I think AI is going to be the greatest force for economic empowerment and a lot of people getting rich we have ever seen."

Yet a year on, hardly anything has changed. It’s not that the models aren’t impressive – we have seen some great releases like GPT-4o, Claude 3.5 Sonnet, and Llama 3.1. But for some reason, these AI models have barely made a dent in the workplace. A recent survey by Forbes suggests that instead of making work easier, AI is increasing employee workload and stress.

Things haven’t been going great for AI companies either. If you’ve been following the news around OpenAI, you’ll know it is in dire financial straits – it is reportedly on track to lose $5 billion in 2024 and is in danger of running out of cash. Many prominent start-ups such as Inflection AI, Stability AI, and Humane AI have suffered serious setbacks.

So what happened to the AI revolution we were promised? 🤔

To understand this, let us first learn what an AI agent is.

AI agents are entities that can perceive their environment, make decisions, and perform actions autonomously to achieve predefined goals. In other words, these agents autonomously generate real economic value.

Ok, that sounds impressive. But AI agents, apart from some impressive demos, have had little success in the real world.

This raises the question – why haven’t agents been able to replicate the success of ChatGPT?

To answer this question, we need to first understand the key difference between chatbots and agents.

AI Chatbots vs AI Agents

Chatbots: Every response is inspected by the user. For instance, if I ask ChatGPT “How do I book a trip to the Bahamas?” it will give me instructions on how to search flights, select the lowest prices, and choose suitable departure times.

Agents: They carry out multiple steps without interacting with the user. For the same task, an agent would autonomously figure out the cheapest flights and the best timings, and then complete the booking. It may prompt the user if additional info is needed (e.g. dates), but otherwise, the user wouldn’t even be aware of its actions.

In other words, a Chatbot has a human-in-the-loop after N=1 actions. But an Agent has a human-in-the-loop after N=many actions.

Why is this a big deal? 🤨

Because AI models, despite all our progress, aren’t perfect. They still hallucinate and still get basic stuff wrong (“9.11 > 9.90”). Many people believe hallucinations are inherent to LLMs and will never go away.

And at work, a small amount of error makes all the difference. Let us draw an analogy:

Imagine you have a bot that enters 99% of the values in an Excel sheet correctly. Sounds like a pretty useful agent, right?

Not quite. Because you don’t know where the 1% of errors are, you need to recheck the entire sheet. And at that point, you are better off just using a human to do the job!

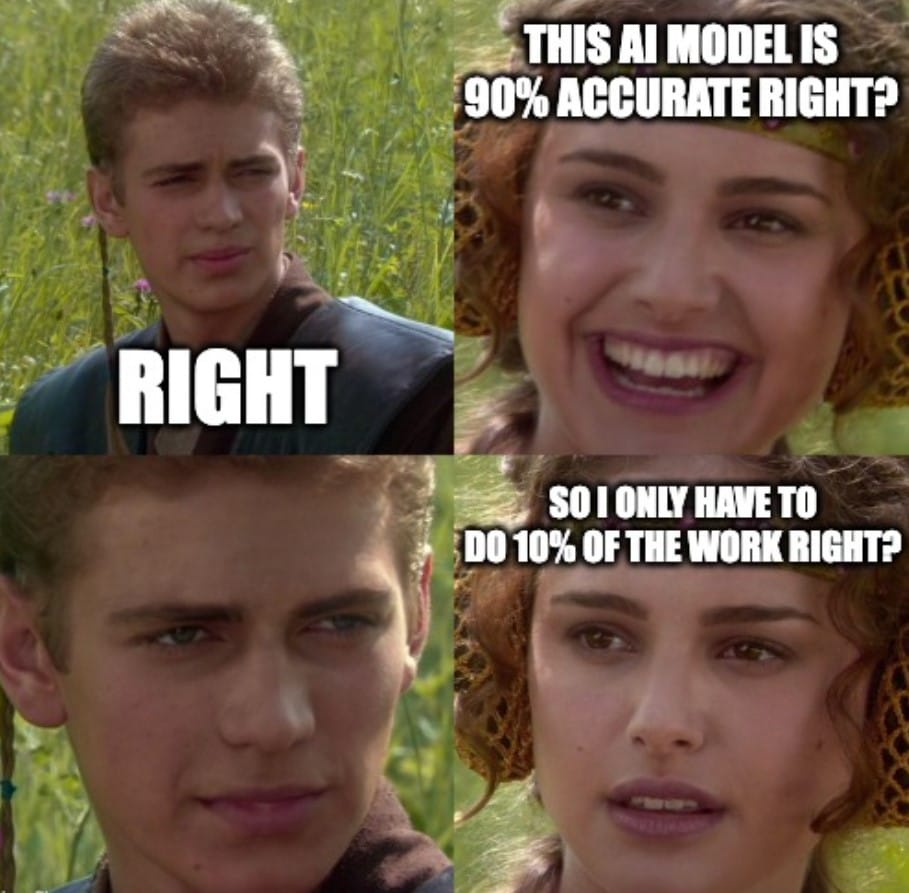

What makes AI different from humans? A human gets 99.9% of the work right, whereas an AI model only gets ~90% of simple tasks right. Moreover, on challenging tasks like fixing software bugs, AI models are still under 20% accurate. With 99.9% accuracy, you can just ignore the 0.1% of failed tasks. This means your boss can trust you to get the job done correctly without having to redo it herself.

In the context of Chatbots vs Agents

When we use chatbots to assist us, we can easily fix the ~10% of erroneous responses before any harm is caused.

But with agents, a 10% error rate is a total disaster!

- Because these agents have to chain together dozens of simple tasks, the overall accuracy is likely to be ~5-10%.

- Moreover, agents perform economically important tasks that are much more sensitive to mistakes!
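To see where a figure in that range can come from, here is a minimal sketch of the compounding arithmetic. It assumes each step succeeds independently with the same probability, which is a simplification; the function name `chain_success_rate` and the step counts are illustrative choices, not taken from any benchmark.

```python
def chain_success_rate(step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain succeeds.

    Assumes steps fail independently and share one accuracy value,
    which real agents will not satisfy exactly.
    """
    return step_accuracy ** steps


if __name__ == "__main__":
    # Compare a ~90%-accurate model with a ~99.9%-accurate human.
    for steps in (1, 10, 20, 30):
        ai = chain_success_rate(0.90, steps)
        human = chain_success_rate(0.999, steps)
        print(f"{steps:>2} steps: AI {ai:.1%}, human {human:.1%}")
```

Under these assumptions, 20 to 30 chained steps at 90% per-step accuracy land at roughly 4% to 12% overall, while 99.9% per step stays above 97%.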

Humans have yet another advantage: their mistakes tend to be predictable, whereas current AI models can fail in mysterious and unpredictable ways. (Andrej Karpathy has named this phenomenon “jagged intelligence”.) This means AI-committed errors are harder to catch downstream.

Can we increase the accuracy of AI Agents? 🧐

Let’s look at some popular ideas that have been proposed for improving AI agents.

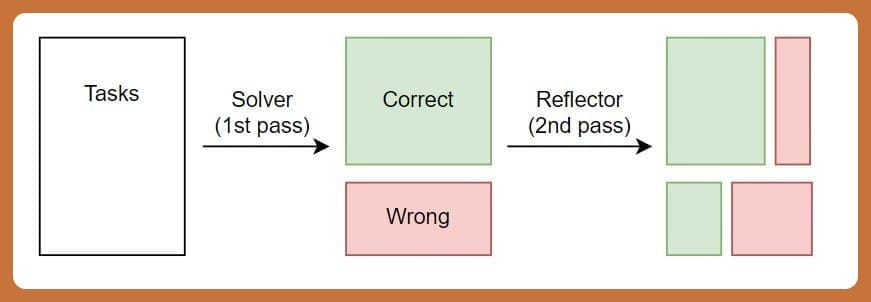

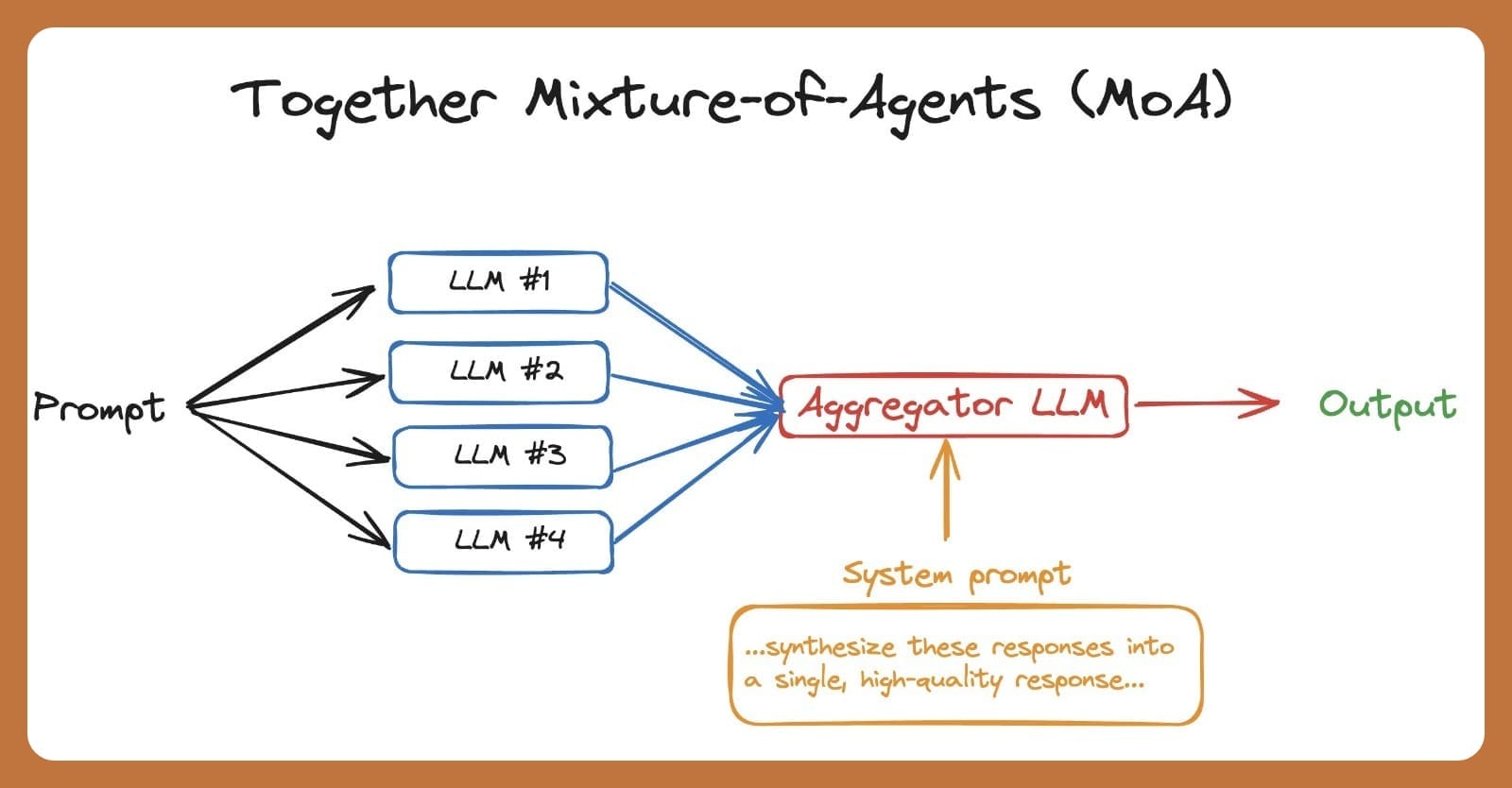

Proposal 1: Use two agents (or two passes). The first one generates an initial output, and the second agent fixes errors and generates a more accurate solution. So the final error rate is 10% times 10%, i.e. only 1%!

Drawback: Unfortunately this doesn’t work, because LLM verifiers are just as error-prone as generators. For every error it fixes, it is likely to mess something up that wasn’t wrong in the first place. Some studies even find that reflection can make output worse!

LLMs are also not great at fixing their own mistakes, even when they do catch them. The result is that while some mistakes are fixed, other new ones appear, and the overall accuracy doesn’t increase.
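A rough way to see why the “10% times 10%” estimate is too optimistic is to model the second pass explicitly. The sketch below is a toy model, not a measurement: `fix_rate` (the chance the verifier repairs a wrong output) and `break_rate` (the chance it damages a correct one) are hypothetical parameters introduced for illustration.

```python
def two_pass_error(gen_error: float, fix_rate: float, break_rate: float) -> float:
    """Final error rate after a generate-then-verify pipeline.

    gen_error:  probability the first pass is wrong
    fix_rate:   probability the verifier repairs a wrong output (assumed)
    break_rate: probability the verifier breaks a correct output (assumed)
    """
    still_wrong = gen_error * (1.0 - fix_rate)      # errors that survive
    newly_wrong = (1.0 - gen_error) * break_rate    # fresh errors introduced
    return still_wrong + newly_wrong


if __name__ == "__main__":
    # Naive estimate: the verifier catches 90% of errors and never breaks anything.
    print(f"ideal verifier: {two_pass_error(0.10, 0.90, 0.00):.1%}")
    # A verifier as error-prone as the generator also breaks 10% of good outputs.
    print(f"noisy verifier: {two_pass_error(0.10, 0.90, 0.10):.1%}")
```

In this toy model the naive 1% figure only appears when `break_rate` is zero. Once the verifier breaks correct outputs about as often as the generator makes mistakes, the final error rate is back at roughly 10%.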

Proposal 2: Let’s generate 20 different outputs and choose the best one. Surely this will improve things?

Drawback: Research shows that this does give a small boost in accuracy, but it imposes a huge additional cost in terms of time and expense. There are other issues too:

- Except in objective-style questions, where you can take a simple majority vote, choosing the best answer is non-trivial. We can use an LLM to make this choice, but it will have its own error rate.

- Even in objective-style questions, majority-voting only works when the right answer has a higher probability than any wrong hallucination. This is not always the case.
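The majority-voting caveat can be illustrated with a small simulation. This is a sketch under simplifying assumptions: each of the 20 samples is drawn independently from a fixed answer distribution, and the distributions used here are made up for illustration. The function name `majority_vote_accuracy` is hypothetical.

```python
import random
from collections import Counter
from typing import Mapping


def majority_vote_accuracy(answer_probs: Mapping[str, float], votes: int = 20,
                           trials: int = 2000, seed: int = 0) -> float:
    """Estimate how often the most common sampled answer is "right".

    answer_probs maps each candidate answer to its sampling probability;
    the correct answer must use the key "right". Ties go to whichever
    tied answer was sampled first.
    """
    rng = random.Random(seed)
    answers = list(answer_probs)
    weights = list(answer_probs.values())
    wins = 0
    for _ in range(trials):
        sample = rng.choices(answers, weights=weights, k=votes)
        winner = Counter(sample).most_common(1)[0][0]
        wins += winner == "right"
    return wins / trials


if __name__ == "__main__":
    # The right answer is the single most likely output: voting helps.
    print(majority_vote_accuracy({"right": 0.6, "wrong_a": 0.2, "wrong_b": 0.2}))
    # One hallucination is more likely than the right answer: voting hurts.
    print(majority_vote_accuracy({"right": 0.3, "wrong_a": 0.5, "wrong_b": 0.2}))
```

When the right answer is the most likely single output, voting pushes accuracy above the single-sample rate. When one wrong answer is more likely, voting amplifies that wrong answer, and accuracy falls below what a single sample would give.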

Ultimately, tricks like these can’t raise accuracy by much. If they could, we would have achieved AGI last year 🙂

What lessons can we learn from this? ✍️

So do all the facts discussed above mean economic applications of LLMs are doomed? 😞

Not quite. However, the key ingredient required to be successful is humility. Focus on applications that have a human in the loop, if not after N=1 actions, then after a small number of steps, which an LLM can manage. The human operator can fix mistakes with a small amount of effort, removing the need to hire an extra worker.
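As a rough illustration of “a human in the loop after a small number of steps”, the sketch below runs agent steps in small batches and pauses for review after each batch. All names here (`Step`, `Review`, `run_with_checkpoints`, `max_unreviewed`) are hypothetical and exist only for this example; it is one possible shape for such a loop, not a description of any particular product.

```python
from typing import Callable, List

Step = Callable[[], str]               # hypothetical: one agent action, returns its result
Review = Callable[[List[str]], bool]   # hypothetical: human approves (True) or rejects a batch


def run_with_checkpoints(steps: List[Step], review: Review,
                         max_unreviewed: int = 3) -> List[str]:
    """Run steps in order, asking for review after every `max_unreviewed` results.

    Returns the results the reviewer approved. A rejected batch stops the
    run so the human can take over before errors compound further.
    """
    approved: List[str] = []
    pending: List[str] = []
    for index, step in enumerate(steps):
        pending.append(step())
        is_last = index == len(steps) - 1
        if len(pending) == max_unreviewed or is_last:
            if not review(pending):
                break  # hand control back to the human
            approved.extend(pending)
            pending = []
    return approved


if __name__ == "__main__":
    demo_steps = [lambda i=i: f"step-{i}" for i in range(5)]
    # Stand-in reviewer that approves everything; a real one would prompt a person.
    print(run_with_checkpoints(demo_steps, review=lambda batch: True, max_unreviewed=2))
```

Keeping `max_unreviewed` small bounds how many unchecked actions can pile up before someone looks at them, which is the point of the argument above.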

Some applications of this type are:

- Sales Development Reps

- Customer service

- Compliance

- Law clerks

- Coding assistants

Our approach to AI agents at Floworks 🤖💼

Of course, we at Floworks are keenly involved in building AI agents.

But how can we succeed in this tricky domain?

We believe these 3 key facts will help us:

Humans in the loop:

As we saw, human oversight is key to deploying today’s agents productively. We are working in Sales – an activity that is intrinsically built around humans.

Limited degrees of freedom:

Our AI SDR performs a modest number of intelligent tasks – reaching out to a prospect, answering product FAQs, setting up a meeting – before handing control back to a human. This means that the hardest parts of the sales operation – which include building a personal connection – are still managed by humans.

Technology:

We are powered by a proprietary ThorV2 architecture which is highly proficient in using business tools like CRMs, Calendars, and Emails.

So what do you think? Is 2025 going to be the “year of AI agents” as Andrew Ng predicted, or are true agents many years away? Share your thoughts in the comments.